Ever heard of CSipSimple? It’s an Android SIP client, the best in my opinion, that ports the PJSIP stack to Android devices and adds a really good-looking user interface on top of it.

Four months ago, I started studying Android programming, and it didn’t take long for me to fall in love with it. Learning it was relatively easy because of my background in Java, but it is a complicated platform with so much diversity that it will be a long time before I can say I fully understand everything.

Creating a custom version of CSipSimple has been on my to-do list for more than a year. I first tried to rebrand it, but I had no idea how to do it. I even downloaded the code and took a look at it, but it looked like Chinese to me.

Finally, I decided to study Android, and the result is a work in progress that is looking quite good and promises to be a great product for ng-voice, the company I work for.

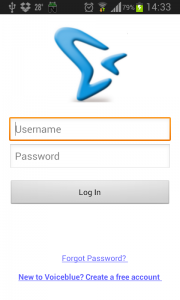

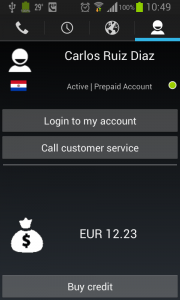

Above are a few screenshots of the product. With this link you can get to the GitHub project, which has a full list of features and of the things that make this softphone different from the original CSipSimple.